Research Areas: Computer Graphics & Vision

Visual computing encompasses technologies and applications that integrate computer graphics with computer vision. Computer graphics covers the foundations and applications of acquiring, representing, and interacting with the three-dimensional (3D) real and virtual worlds, while computer vision allows for a deeper understanding of the real world in the form of two-dimensional (2D) images or video. The Visual Computing Laboratory (VCLAB) at KAIST is particularly interested in visual phenomena related to light transport, from a light source, through light traversal over 3D surfaces, to visual perception in the brain. Our research subjects include the fundamental elements of the real world: light, color, geometry, simulation, and the interactions among these elements. In particular, we focus on acquiring material appearance for better color representation in 3D graphics, hyperspectral 3D imaging for a deeper physical understanding of light transport, and color perception in 3D for a deeper understanding of color. Our contributions in this research enable various hardware designs and software applications of visual computing.


Computational Imaging:


3D Imaging

We have introduced various end-to-end measurement systems for capturing physically meaningful information about 3D objects. We have developed many compressive sensing imaging systems suited to acquiring such data in the hyperspectral range at high spectral and spatial resolution, and we characterize these imaging systems with high accuracy. The imagers have been integrated into a 3D scanning system to enable the measurement of, for instance, spectral reflectance.


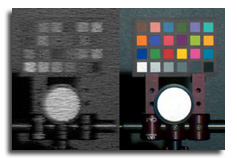

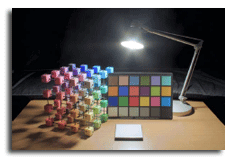

Hyperspectral Imaging

Classical color cameras have focused on mimicking reproduction systems rather than capturing physically meaningful information about real-world objects and scenes. Motivated by the idea of bridging the gap between high-fidelity color imaging and physically meaningful imaging, we have investigated the foundations and applications of optics, light transport simulation, and computational imaging algorithms. We have built various snapshot hyperspectral imaging systems, pushing the limits of existing imaging systems.


High-Dynamic-Range (HDR) Imaging

Digital imaging has become standard practice, but it is optimized for plausible visual reproduction of scenes, which is just one application of digital images. We have introduced various techniques for HDR imaging that allow us to build physically meaningful HDR radiance maps for measuring real-world radiance. The achieved accuracy of this technique rivals that of a spectroradiometer.
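As a rough illustration of how a radiance map can be assembled from differently exposed captures, the sketch below merges linear pixel readings with a simple triangle weighting. It is a generic textbook-style merge and not the laboratory's technique; the function names, the thresholds, and the assumption of a linear sensor response normalized to [0, 1] are all hypothetical.

```python
def hat_weight(z, z_min=0.05, z_max=0.95):
    """Triangle weight (assumed): trust mid-range readings, ignore
    readings that are nearly black or nearly saturated."""
    if z <= z_min or z >= z_max:
        return 0.0
    mid = 0.5 * (z_min + z_max)
    half = 0.5 * (z_max - z_min)
    return 1.0 - abs(z - mid) / half


def simulate_reading(radiance, exposure_time):
    """Hypothetical linear sensor that clips at 1.0."""
    return min(1.0, radiance * exposure_time)


def merge_radiance(readings, exposure_times):
    """Weighted average of reading / exposure_time for one pixel.

    Returns None when every reading is under- or over-exposed,
    because no trustworthy estimate exists in that case.
    """
    num = 0.0
    den = 0.0
    for z, t in zip(readings, exposure_times):
        w = hat_weight(z)
        num += w * z / t
        den += w
    return num / den if den > 0.0 else None


# One pixel with (assumed) relative radiance 2.0, captured at three exposures.
times = [0.1, 0.25, 1.0]
readings = [simulate_reading(2.0, t) for t in times]  # the last one saturates
estimate = merge_radiance(readings, times)
```

The saturated long exposure receives zero weight, so the estimate is driven by the two well-exposed readings. A real pipeline would additionally need radiometric calibration of the camera response before the result could be interpreted as physical radiance.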


Learning-based Graphics and Vision:


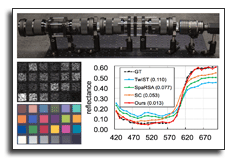

Learning-based Spectral Imaging

We have developed various hyperspectral imaging systems that reconstruct spectral information from compressive input with very high accuracy. We have also built many spatio-spectral compressive imagers, designed together with a spectral reconstruction algorithm, that provide both high spatial and high spectral resolution, overcoming the long-standing tradeoff of compressive hyperspectral imaging.


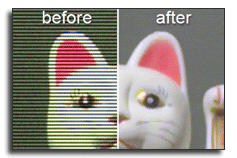

Learning-Based HDR Imaging

We have developed interlaced HDR imaging via joint learning. It jointly solves two traditional problems, deinterlacing and denoising, that arise in interlaced video imaging with different exposures. We solve the deinterlacing problem using joint dictionary learning via sparse coding. Since partial detail information is often available in the differently exposed rows, we use it to reconstruct the details of the extended dynamic range from the interlaced video input.
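To make the sparse coding step more concrete, the sketch below recovers a sparse code for a signal over a fixed dictionary using iterative soft thresholding (ISTA). This is a generic illustration under stated assumptions, not the laboratory's joint learning method: the dictionary `D`, the penalty `lam`, and all function names are hypothetical, and dictionary learning itself (updating `D`) is omitted.

```python
def soft_threshold(x, t):
    """Shrink x toward zero by t (proximal operator of the L1 norm)."""
    if x > t:
        return x - t
    if x < -t:
        return x + t
    return 0.0


def ista(D, y, lam=0.1, iters=2000):
    """Approximately minimise 0.5 * ||y - D a||^2 + lam * ||a||_1.

    D is a list of m rows with k entries each (atoms are the columns).
    The step 1 / ||D||_F^2 is conservative: it never exceeds the
    reciprocal of the gradient's Lipschitz constant.
    """
    m, k = len(D), len(D[0])
    step = 1.0 / sum(v * v for row in D for v in row)
    a = [0.0] * k
    for _ in range(iters):
        # Residual r = D a - y, then gradient g = D^T r.
        r = [sum(D[i][j] * a[j] for j in range(k)) - y[i] for i in range(m)]
        g = [sum(D[i][j] * r[i] for i in range(m)) for j in range(k)]
        a = [soft_threshold(a[j] - step * g[j], step * lam) for j in range(k)]
    return a


# Toy dictionary with three unit-norm atoms in 2D (assumed for illustration).
D = [[1.0, 0.0, 0.6],
     [0.0, 1.0, 0.8]]
y = [1.2, 1.6]  # exactly twice the third atom
code = ista(D, y)
```

In this toy case the signal is a multiple of a single atom, so the L1 penalty drives the other two coefficients to zero and slightly shrinks the remaining one. In a joint dictionary setting, a code found this way from the observed rows would be reused with a paired dictionary to synthesize the missing rows; that pairing is not shown here.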


Visual Perception:


HDR Color Appearance Model

We have developed a novel color appearance model that not only predicts human visual perception but is also directly applicable to HDR imaging. To conduct the color experiments, we built a customized display device that produces high luminance. The scientific measurements of human color perception from these experiments enable us to derive a color appearance model that covers the full range of the human visual system.


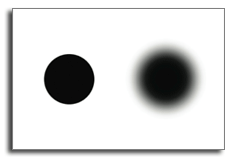

Spatially-Varying Appearance Model

Color perception is known to vary with the surrounding spatial structure, but the impact of edge smoothness on color has not been studied in color appearance modeling. We have studied the appearance of color under different degrees of edge smoothness. Based on our experimental data, we have developed various computational models that predict such appearance changes. These models can be integrated into existing color appearance models.


Interactive Computer Graphics:


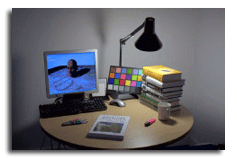

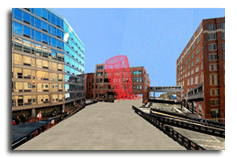

3D Representation of the Real World

The only current methods for representing context involve designing in a heavyweight computer-aided design system or sketching on a panoramic photo. The former is too cumbersome; the latter is too restrictive in viewpoint and in its handling of occlusions and topography. We have developed various approaches to presenting environmental contexts in which computer graphics content and real objects and scenes can be harmonized seamlessly.


Physically-Based Rendering (PBR)

While the high-frequency nature of direct lighting requires accurate visibility, indirect illumination mostly consists of smooth gradations, which tend to mask errors due to incorrect visibility. We have exploited this by approximating visibility for indirect illumination with imperfect shadow maps in conjunction with a global illumination algorithm, enabling indirect illumination of dynamic scenes at real-time frame rates.


© Visual Computing Laboratory, School of Computing, KAIST.

All rights reserved.
